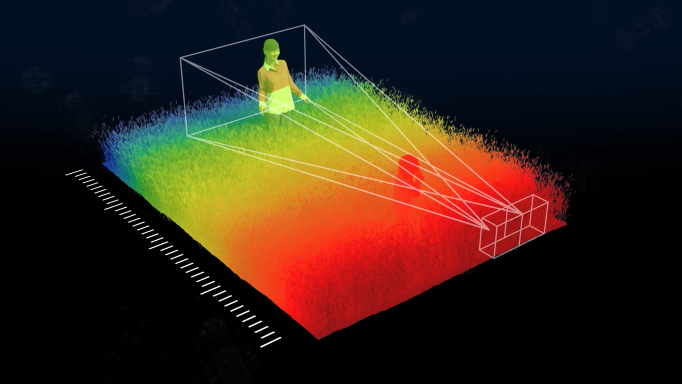

Corephotonics’ depth algorithm is based on stereoscopic vision, similar to human binocular vision. We use our dual cameras to produce a dense, detailed and accurate depth map of the scene.

In stereo vision, an object close to the cameras appears at noticeably different positions in the two images. This shift is called disparity. As the object moves farther away from the cameras, the disparity shrinks until, at infinity, the object appears at the same place in both images. A stereoscopic depth map is computed from these per-pixel disparities.
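To make the relation between disparity and distance concrete, here is a minimal sketch of the textbook formula for an idealized, rectified stereo pair: depth = focal length × baseline / disparity. It is not the Corephotonics algorithm. The function name, the 1000-pixel focal length and the 10 mm baseline are hypothetical values chosen only for illustration.

```python
import math


def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Return depth in metres for a rectified stereo pair: Z = f * B / d.

    disparity_px    : horizontal shift of the point between the two images (pixels)
    focal_length_px : focal length expressed in pixels (assumed equal for both cameras)
    baseline_m      : distance between the two camera centres (metres)
    """
    if disparity_px < 0:
        raise ValueError("disparity must be non-negative")
    if disparity_px == 0:
        # Zero disparity: the point sits at the same place in both images,
        # which corresponds to an object at infinity.
        return math.inf
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical camera: 1000 px focal length, 10 mm baseline.
    for d in (50.0, 10.0, 1.0, 0.0):
        z = depth_from_disparity(d, focal_length_px=1000.0, baseline_m=0.01)
        print(f"disparity {d:5.1f} px -> depth {z} m")
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters far more for distant objects than for near ones, which is one reason accurate disparity estimation is important.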

The Corephotonics depth map algorithm is designed to deal with various challenges while placing minimal load on the application processor. Such challenges include:

The algorithm is highly optimized not only for accuracy but also for real-time execution on smartphone application processors, at up to 30 fps, while maintaining a low memory footprint.

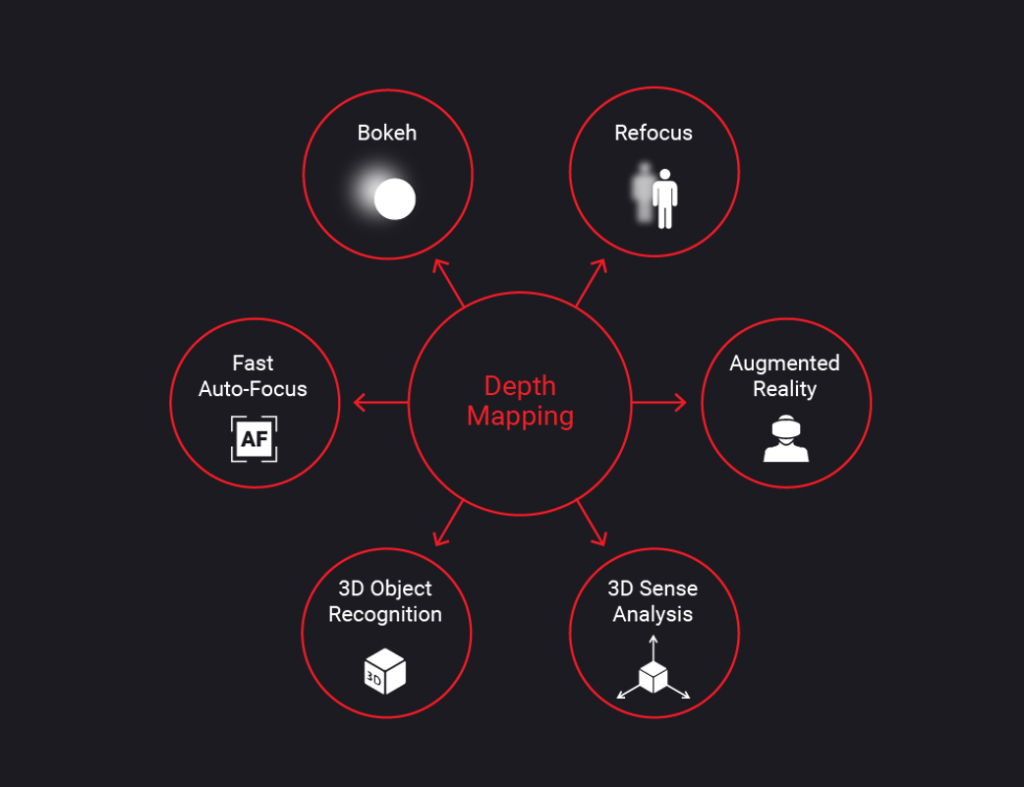

In turn, such depth maps can be used for various applications, including:

